The only labeling + harmonization studio built for multimodal time-series with full provenance you can replay

Built for complex time-series and multimodal data — label events, transitions, and anomalies with precision and consistency.

Align, standardize, and synchronize multimodal signals into a unified timeline for accurate analysis and modeling.

Connect effortlessly with your existing data ecosystem — from databases and simulators to analytics pipelines and control systems.

Handle massive, multimodal datasets — from high-rate sensors to simulations — all within one scalable, high-performance platform.

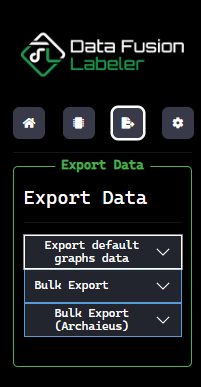

Import trimmed datasets to rapidly confirm large-scale backend processing. Visualize and verify before full-scale deployment.

Track every transformation from source to model — full lineage, versioning, and metadata for complete reproducibility.

Extend dFL with custom graphs, auto-labelers, and ingestion logic using our full-featured SDK for seamless integration.

Export harmonized, labeled datasets with full provenance — ready for seamless integration into AI and ML pipelines.

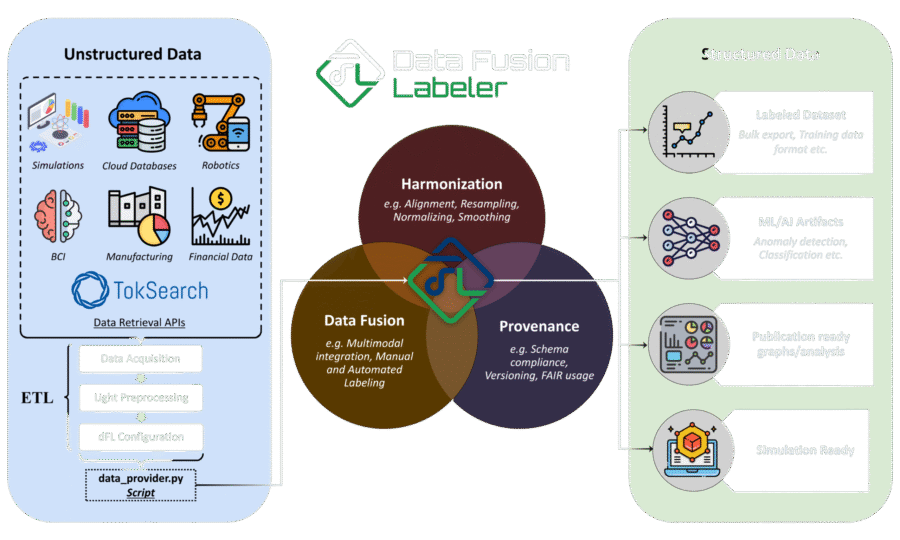

Harmonize irregular, noisy, and asynchronous signals into a unified, physically consistent timeline. dFL provides tools to align and resample multi-sensor, multi-rate data onto a common grid — enabling clean labeling, meaningful comparison, and ML-ready datasets.

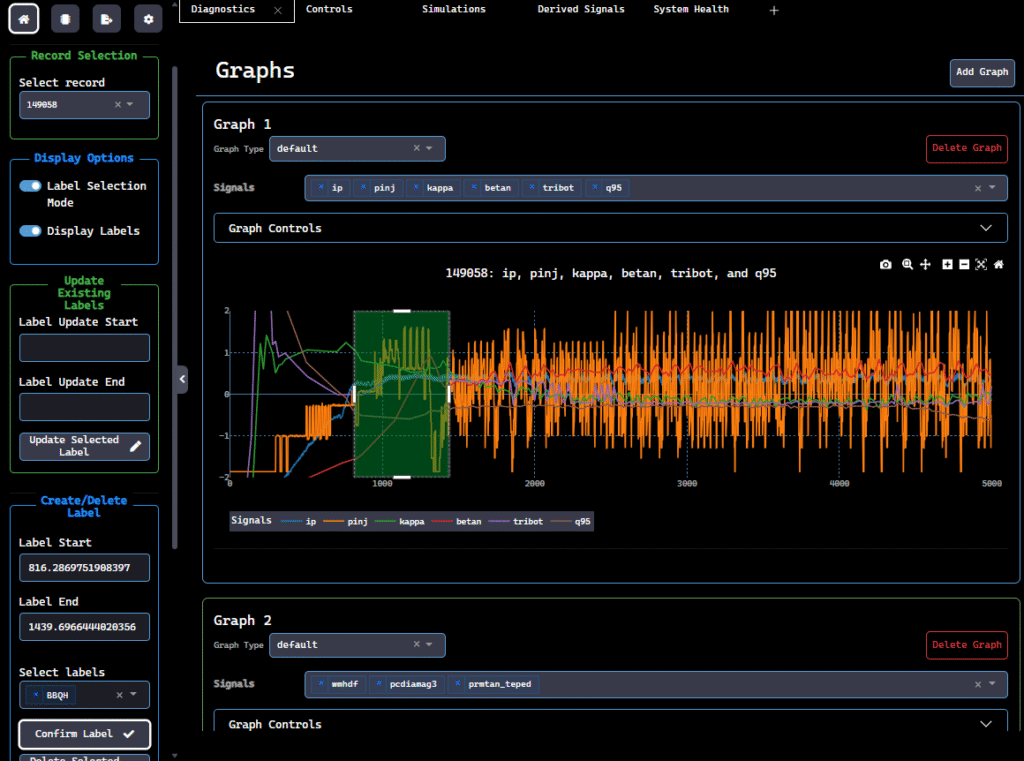

Label dynamic behaviors, transitions, and cross-signal relationships directly from interactive plots. dFL lets you capture complex temporal patterns with precision and context.

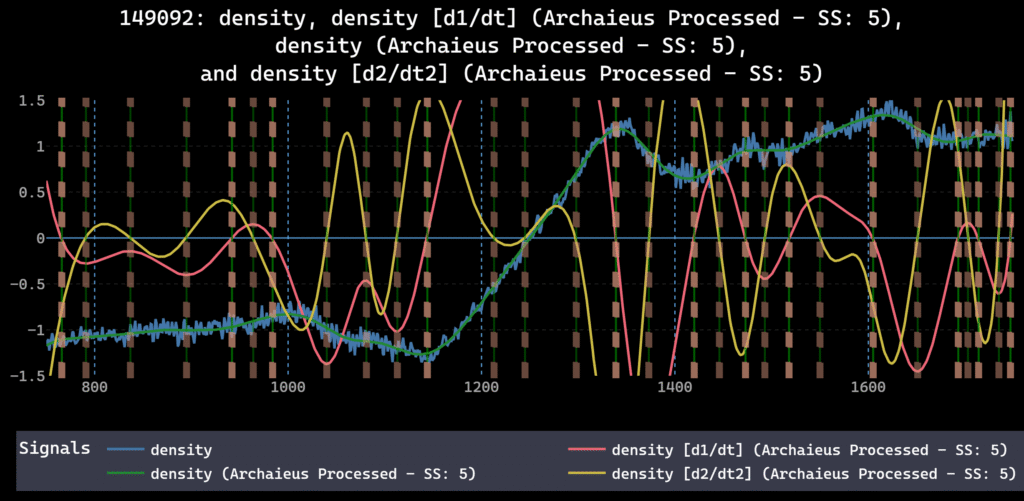

Smooth, trim, fill, or resample your data using drag-and-drop controls. dFL’s visual DSP toolkit lets engineers preprocess sensor streams intuitively while preserving full control over each transformation. Or, develop custom pipelines that plugin seamlessly with dFLs backend.

Use built-in anomaly detectors or plug in your own models. Autolabel spans or events, then refine with human QA. dFL’s active learning loop improves accuracy with every correction.

Export harmonized, labeled datasets in ML-ready formats. dFL preserves every step — from signal processing to tagging — ensuring your data is traceable, shareable, and ready for training or compliance.

Step inside an additive manufacturing workflow where high-frequency sensor data actually turns into decisions.

In this case study, we use dFL by Sophelio on a NIST Laser Powder Bed Fusion dataset to fuse machine commands, real melt-pool signals, and XCT scans into a single, engineer-ready workspace.

dFL’s custom visualizations surface process drifts layer-by-layer, then Python-based autolabelers transform those insights into an automated anomaly detector that flags power deviations across all 250 layers in minutes.

The result: a repeatable path from raw AM telemetry to targeted quality assurance and faster process optimization—without staring at every graph.

In this case study, we use dFL by Sophelio to turn a decade of raw Boston weather records into a clean, ML-ready labeled dataset.

Starting from simple multiyear signals—precipitation, temperature, and pressure—dFL handles harmonization, timestamp alignment, and exploratory visualization in a single workspace.

With a few natural language prompts, we generate an interactive threshold-based precipitation graph and a custom autolabeler that automatically flags high-precipitation intervals across all years.

The result is a fully reproducible pipeline from data fetch to labeled CSV export, ready to drive precipitation prediction models and downstream analytics.

In this case study, we show how the Data Fusion Labeler (dFL) turns raw DIII-D tokamak signals into clean, reusable labels for ELMs and plasma regimes.

Starting from machine control parameters, magnetic probes, and filterscope diagnostics, dFL gives plasma physicists a single workspace to visualize shots, mark events manually, and then scale that knowledge with custom autolabelers.

An in-house ELM detector and an ONNX-based plasma mode model are plugged directly into dFL, enabling bulk autolabeling of ELM bursts and regimes across many shots in one pass.

The result is a fully traceable, ML-ready fusion dataset that supports better QA, faster analysis cycles, and more reliable ELM mitigation and prediction workflows.

Enter Sophelio, where deep data expertise meets modern AI. We specialize in transforming complex, messy, and multimodal datasets into clean, actionable, and scalable intelligence. From scientifically interpretable models to end-to-end MLOps pipelines, our platform and tools are built for teams who know that great AI starts with knowing your data.

Compatible with top ML platforms, data lakes, and custom pipelines, so you stay in flow, not in config. Incorporate any python library into your stack.

The above prices do not include applicable taxes based on your billing address. The final price will be displayed on the checkout page.

All subscriptions renew based on your billing cycle at list price.

The Data Fusion Labeler (dFL) is a unified software framework that integrates data harmonization, data fusion, and provenance-rich labeling for fusion energy research. It transforms heterogeneous, asynchronous, and multimodal datasets—spanning diagnostics, simulations, and control telemetry—into schema-compliant, uncertainty-aware, and reproducible formats. This enables reliable scientific analysis, machine learning, and control workflows

Unlike traditional pipelines that treat transformations as independent or commutative steps, dFL enforces operator-ordering awareness—recognizing that the sequence of resampling, smoothing, or normalization operations fundamentally affects data integrity. Each operator is applied with reproducible context, ensuring transformations preserve phase relationships, units, and provenance. The result is higher-fidelity datasets and more reliable downstream analytics, even with modest models.

Every action in dFL—manual or automated—is recorded in a transparent provenance graph that captures the who, what, when, and why of every transformation. This produces an immutable data lineage, allowing users to regenerate, audit, or merge workflows across teams and institutions. dFL thereby supports FAIR (Findable, Accessible, Interoperable, Reproducible) data principles out of the box, dramatically reducing time lost to uncertainty or rework.

Yes. dFL incorporates a flexible auto-labeling engine capable of applying rules, thresholds, or learned models to detect and annotate relevant events or regimes within continuous data streams. It also supports hybrid, human-in-the-loop labeling, enabling experts to refine or override automated results. This combination accelerates curation of large datasets while maintaining interpretability and scientific control.

dFL is designed for cross-domain adaptability. It runs locally on workstations or scales to cloud and HPC environments, and can interface with SQL, HDF5, Parquet, or REST-based data stores. Because every harmonization and labeling operator is modular, organizations can adapt dFL to applications ranging from industrial monitoring and IoT sensor fusion to financial time-series analytics and AI model training pipelines—anywhere reproducible, multimodal data preparation is required.

Yes. dFL provides open APIs and plugin interfaces for seamless integration with existing data infrastructure—whether relational databases, object stores, or cloud orchestration tools. It supports Pythonic data access, command-line automation, and RESTful endpoints, allowing it to embed directly within modern ETL, MLOps, and DevOps pipelines.

Any domain struggling with heterogeneous, asynchronous, or multiformat data can benefit from dFL. Typical applications include:

Manufacturing and process monitoring (synchronizing sensor and control data)

Finance and econometrics (fusing multi-source time-series)

Healthcare and life sciences (harmonizing multimodal patient or assay data)

Energy and infrastructure (monitoring, forecasting, and anomaly detection)

In essence, dFL provides a universal framework for clean, harmonized, and trustworthy data streams across domains.

dFL maintains full audit trails and versioned exports, ensuring compliance with enterprise and regulatory standards such as ISO 8000, FAIR, and GDPR-style reproducibility and traceability. Each derived dataset can be traced back to its raw source and transformation lineage, empowering organizations to demonstrate data integrity, explainability, and provenance compliance during audits or model validations.

Organizations using dFL typically experience major reductions in data-preparation overhead—often by an order of magnitude—while achieving higher analytical reproducibility and transparency. More importantly, dFL’s harmonization framework enables teams to discover latent correlations and cross-modal patterns that were previously obscured by inconsistent preprocessing or missing context.

dFL is designed for uncertainty-aware data handling from the ground up. Users can embed custom uncertainty models directly into the ingestion layer using the data_provider and fetch_data methods, allowing each signal or feature to carry its own confidence intervals, variance estimates, or measurement noise models. These uncertainties are then propagated automatically through the harmonization pipeline—from trimming and resampling to smoothing and normalization—ensuring that downstream analyses and models retain a physically and statistically coherent error structure. This design enables traceable, physics-informed uncertainty management that reflects the realities of each data source rather than imposing a one-size-fits-all assumption.

Get hands-on with our full-stack ML tooling—label, harmonize, analyze, and export data with scientific precision. No setup, no guesswork, just powerful infrastructure built for data-driven teams. Try for free.